Warning: Story contains strong language

I'm the BBC's first specialist disinformation reporter - and I receive abusive messages on social media daily. Most are too offensive to share unedited. The trigger? My coverage of the impact of online conspiracies and fake news. I expect to be challenged and criticised - but misogynistic hate directed at me has become a very regular occurrence.

Messages are laden with slurs based on gender, and references to rape, beheading and sexual acts. Some are a mish-mash of conspiracy theories - that I'm "Zionist-controlled", that I, myself, am responsible for raping babies. The C-word and F-word are repeatedly used.

It's not just me - from politicians around the world and Love Island stars to frontline doctors, I've been hearing from women subjected to the same kind of hate. New research, shared with the BBC, suggests women are more likely than men to receive this sort of abuse, that it's getting worse - and that it's often combined with racism and homophobia.

I wanted to understand why this is happening, the threat it poses - and why social media sites, the police and the government aren't doing more about it. So, I set out to make a film for the BBC's Panorama programme.

We set up a fake troll account across the five most popular social media platforms to see whether they are promoting misogynistic hate to such users. Using an AI-generated photograph, we designed our fake troll to be similar to the people who sent me abuse. Our troll engaged with content recommended by the social media platforms, but did not send out any hate.

As part of the programme, think-tank Demos carried out a comprehensive deep dive into abuse received by reality TV contestants, analysing more than 90,000 posts and comments about them. It was perhaps a surprising place to start, but programmes like Love Island serve almost as a microcosm for society, allowing researchers to compare the abuse directed at men and women from different backgrounds. Their popularity also generates a lot of online conversation.

We discovered:

- Our troll account was recommended more and more anti-women content by Facebook and Instagram, some involving sexual violence.

- Female reality TV contestants - including those on this year's Love Island - are disproportionately targeted on social media, with abuse frequently rooted in misogyny and combined with racism.

- Draft proposals from the UN to get the social media companies to better protect women have been shared exclusively with the BBC.

Abusive accounts untouched

Social media companies say they take online hate against women seriously - and they have rules to protect users from abuse. These include suspending, restricting or even shutting down accounts sending hate.

But my experience suggests they often don't. I reported some of the worst messages I've ever received - including threats to come to my house to rape me and commit horrific sexual acts - to Facebook when I received them. But months later, the account remained on Facebook, along with dozens of other Instagram and Twitter accounts sending me abuse.

It turns out my experience is part of a pattern. New research for this programme by the Centre for Countering Digital Hate shows that 97% of 330 accounts sending misogynistic abuse on Twitter and Instagram remained on the sites after being reported.

Twitter and Instagram say they take action when their rules are violated, and closing accounts isn't the only option.

Troll hunting

Curious about who was running accounts sending me - and other women - abuse, I started by looking into the profiles targeting me. Most were run by men based in the UK. From calling me a "daft cow" and telling me I needed to "get laid" to threatening to come and find me and violently or sexually attack me, they bombard me with gender-based slurs again and again.

It turns out, they are real people - not bots. One is a Spurs fan, like me. Another likes vegan cooking. One, whose account was anonymous, even gave away his location by tweeting at delivery service Ocado complaining it didn't deliver to his postcode in Great Yarmouth.

I reached out to them - and one called Steve, a van driver in his 60s from the Midlands, agreed to speak to me on the phone. The messages he'd sent me were less offensive than most of the abuse I receive - mainly gender-based slurs.

Like lots of account holders who sent me hate, he is deep into online conspiracy theories. But like others, the abuse he sent me also attacked me for being a woman. At first he told me he didn't think his messages were that bad - but I explained they were just some of many punctuated with abuse streaming into my inbox.

"I probably made a mistake. I'm a pretty fair bloke," he eventually concluded.

He pointed out that he actually receives hate himself online from "people who believe in global warming and that 9/11 happened". They are responding to conspiracy theories that he shares on social media. I had hoped this might help him see why hate wasn't the answer. And I think by the end of our conversation he was coming around to the idea.

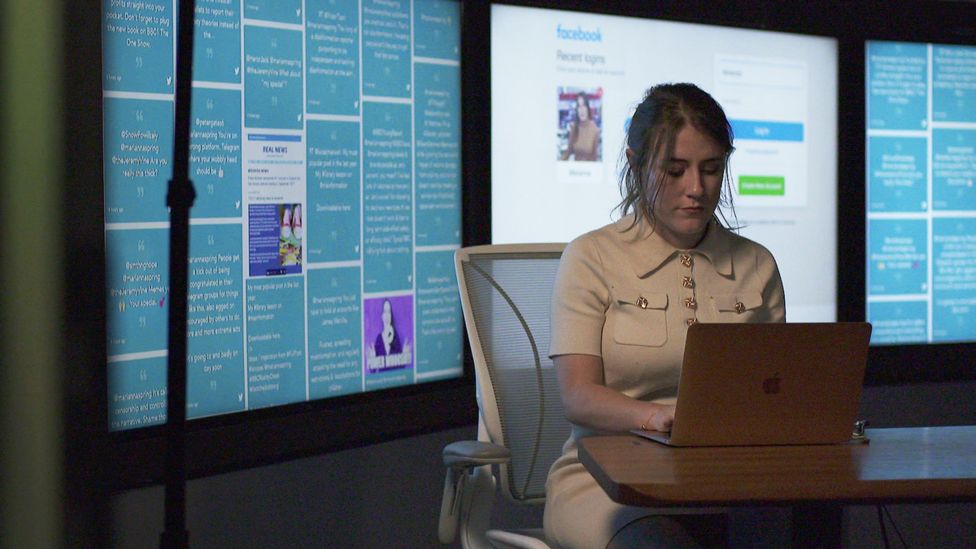

Our conversation got me thinking about what my trolls might be seeing on their social media feeds. I wanted to see whether social media algorithms are pushing more misogyny to accounts similar to those that abuse women online. So I created a fake online persona called Barry and signed him up to the five most popular social media platforms in the UK.

All the main social media companies say they don't promote hate on their platforms and take action to stop it. They each have algorithms that offer us content based on things we've posted, liked or watched in the past. But it's difficult to know what they push to each user.

"One of the only ways to do this is to manually create a profile and seeing the kind of rabbit hole that it might be led down by the platform itself, once you start to follow certain groups or pages," explains social media expert Chloe Colliver, who advised me on the experiment.

She works for the Institute for Strategic Dialogue, looking into extremism and disinformation on social media. She has helped journalists before, and advised me on how I could set up the profiles in an ethical and realistic way - only doing what was necessary to test the algorithms.

Barry's accounts were based on multiple accounts that had sent me abuse. Like my trolls, Barry was mainly interested in anti-vax content and conspiracy theories, and followed a small amount of anti-women content. He also posted some abuse on his profile - so that the algorithms could detect from the start he had an account that used abusive language about women. But unlike my trolls, he didn't message any women directly.

Over two weeks, I logged in every couple of days and followed recommendations, posted to Barry's profiles, liked posts and watched videos.

After just a week, the top recommended pages to follow on both Facebook and Instagram were almost all misogynistic. By the end of the experiment, Barry was pushed more and more anti-women content by these sites - a dramatic increase from when the account had been created. Some of this content involved sexual violence, sharing disturbing memes about sex acts, and content condoning rape, harassment and gendered violence.

They also referenced extreme ideologies. That included the "incel" movement - an internet subculture that encourages men to blame women for problems in their lives. It's been linked to several acts of violence, including recent shootings in Plymouth, in the UK.

"If it were a real person, [Barry] would have been brought into a hateful community full of misogynistic content very, very quickly - within two weeks," says Colliver.

Far from stopping Barry engaging with anti-women content, Facebook and Instagram appear to have promoted it to him. By contrast, there was no anti-women content on TikTok and very little on Twitter. YouTube suggested some videos hostile to women.

Love Island: Women 'disproportionately targeted'

For Panorama, researchers from think-tank Demos analysed messages of abuse directed at reality TV contestants on Love Island (ITV) and Married at First Sight (Channel 4). They found that female reality TV contestants are disproportionately targeted on social media with abuse frequently rooted in misogyny and combined with racism.

While the contestants received mostly positive messages, fashion blogger Kaz Kamwi, 26, and 23-year-old medical student Priya Gopaldas told Panorama they also got some disturbing hate-filled messages.

"The most difficult abuse to receive is any that is racially motivated. When you look at me, I am a dark skinned black woman, that's the first thing you see," says Kaz. "And the fact that my family was exposed to that breaks my heart."

Ellen Judson, who led the research for Demos and focuses on social media policy, says reality TV is a great place to start looking at online hate because the genre is so popular with people expressing who they like or don't like.

"We also see that the contestants are a relatively equal mix of men and women - and from lots of different backgrounds - so it gives us an opportunity to analyse those differences in how the public are responding to them."

Demos looked at more than 90,000 online messages about Love Island and Married at First Sight contestants:

- On Twitter, 26% of posts about women were abusive, versus 14% of those about men

- Abuse targeting women focused primarily on gender and sex (much more so than men), with messages littered with gender-based tropes and talk of sex acts

- Hate intensified when combined with racist language directed at black and Asian female contestants

"People were using explicitly gendered slurs - women being manipulative, women being sneaky, being sexual, women being evil or stupid. Whereas what we saw with men was them being attacked for seemingly not being masculine enough - for being too weak," says Judson. "We also see that women of colour are receiving more pernicious attacks as well based on their race."

I wanted to see what impact this kind of abuse is having, so I spoke to politicians and frontline doctors who use social media to do their jobs. Like me they don't mind being criticised but they do mind when it gets personal.

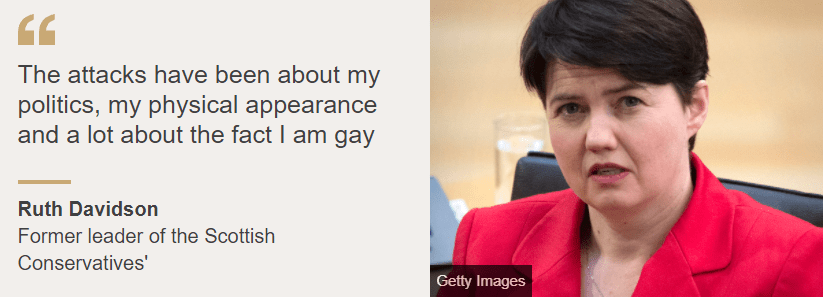

Former leader of the Scottish Conservatives Ruth Davidson fears that abuse targeting women online could turn back the clock when it comes to equality offline.

"The attacks that have come directly to me have been about my politics, some about my physical appearance, a lot has been about the fact that I am gay and a lot of it has been about the fact that I am a woman who has opinions."

There's also concern that online abuse could lead to real world harm.

"You look at your phone and you read somebody who is telling you as an NHS doctor that they want to rape you until you need one of your own ventilators," explains Dr Rachel Clarke, a frontline medic based in Oxford. She's been treating Covid patients during the pandemic and sees using social media as an extension of her duty as a doctor.

That means she has frequently posted warnings about the impact of coronavirus - and encouraged her followers to have a Covid vaccine. It's those tweets in particular that often sparked a wave of misogynistic hate from anti-vaccine activists, not dissimilar to the accounts sending me hate.

"Male doctors that I know will receive abuse online as well. But the volume of abuse is much less. If you're a female doctor, it'll be much more visceral, and it will target you as a woman."

Offline violence

I've been taking part in a major piece of research for the UN's cultural agency Unesco - which looks at the impact of online hate. Lead researcher Julie Posetti and her team asked more than 700 women, mainly journalists and some political activists who are prominent on social media, about their experiences of online hate.

They then studied some of the accounts, including mine and that of Nobel Peace Prize winner, Maria Ressa. She's an investigative journalist from the Philippines who gets lots of online abuse and says she wears a bullet-proof vest because she fears being attacked.

"Online violence is really the new frontier of conflict that women face internationally," Posetti tells me.

Twenty per cent of women who responded to the UN's survey, carried out in collaboration with the International Center for Journalists (ICFJ), said they had already experienced attacks in real life, including stalking and physical assault.

I'm especially worried about some of the hate I receive online, including from a man who appears to have a prior conviction for stalking women. But I've been left frustrated at the police response. After a wave of abuse at the end of April this year, I reported the most serious threats to the police, including about sexual violence. It's a criminal offence to send messages online that are grossly offensive or obscene in order to cause distress.

An officer got in touch initially and I shared my evidence of the abuse - but I only heard back from her weeks later when she told me that she was moving teams, my case was being passed on and there had been no progress. I wasn't contacted by a new liaison officer until July - when it became clear that the evidence I'd shared originally with the police had been lost, something that was later admitted.

I tried to report another batch of rape threats, death threats and abusive messages at the end of July to the new officer. When we met in person in the middle of August, the officer admitted he was not the right person to handle the case - and that it should have been passed on to a specialist team. Yet more delays - and although the seriousness of the messages was acknowledged, there was little in the way of victim support.

By the end of August, I was on to my third police liaison officer - who asked me to review the portfolio of evidence I had already sent in, marking which messages were from Twitter, Instagram and Facebook as he wasn't sure how to use the platforms.

My latest liaison officer has requested more information from the social media sites - but still no progress.

According to data from several police forces, which Panorama obtained through Freedom of Information requests, over the past five years the number of people reporting online hate has more than doubled. But over the same period, there's only been a 32% increase in the number of arrests. The victims are mainly women.

This is happening in the context of increasing pressure on the Met in particular to do more to tackle violence against women on our streets after the killings of Sarah Everard and Sabina Nessa.

I raised concerns that people sending me abuse might turn up at my work - but I was just told to call 999 if I felt in danger.

The Metropolitan Police say they take online hate very seriously and that my case is under active investigation.

The National Police Chiefs' Council says the police take all reports of malicious communications seriously and will investigate, but must prioritise their finite resources. It says police can take action other than making arrests.

The solutions?

Draft proposals from the UN to get the social media companies to better protect women have been shared exclusively with Panorama. They are calling for social media platforms to introduce labels for accounts that have previously sent misogynistic abuse. They also want to see more human moderators taking the decisions about offensive material - and an early warning system for users if they think online abuse could escalate into real world harm.

"We would like to see gender-based online violence treated at least as seriously as disinformation has been during the pandemic by the platforms," explains Julie Posetti, who led the research that triggered these recommendations.

"I think we have to challenge," says Ruth Davidson. "I don't think that it is in anybody's interests for women who are consistently abused in a way that a man wouldn't be to let other young women who are online and seeing that abuse think that's just the way things are."

For women of all ages - myself included - that means refusing to be bullied off social media.
